Research Overview

Human-Robot Shared Control for Medical Robotics:

I proposed the concept of implicit human-robot shared control, in which the human operator conducts operations together with the robot through an intelligent interface. The key components of master-slave mapping are explored, and an adaptive mechanism is incorporated into the control framework to improve the efficiency of teleoperation. Context awareness and human intention recognition are used to implement an adaptive motion scaling framework that enhances surgical operation efficiency. A hybrid interface for robot control is proposed to combine the advantages of different mapping strategies. For dexterous micromanipulation at the cellular level, microrobots with complex shapes that enable out-of-plane control are investigated; these microrobots can serve as dexterous tools for the indirect manipulation of micro/nano-scale objects. Machine learning-based vision tracking techniques for depth estimation and pose estimation are developed to monitor microrobots during optical manipulation. Two control strategies for distributed force control of optical microrobots are developed and verified.

Affordable Medical Robotic Systems for Surgical Training:

This research topic aims to develop affordable alternatives to commercial robotic surgery systems, reducing the cost of advanced surgical training and making it more accessible to trainees. A key feature of these systems is the integration of haptic feedback and force-sensing technologies, which simulate the tactile interaction between surgical instruments and human tissue. This adds realism to the training experience and helps future surgeons refine their tactile skills. Furthermore, these training systems are designed for remote operation over the internet, so trainees can access them from different locations. The remote link is designed to minimize latency so that visual feedback and force data are transmitted efficiently during remote operation.

08

Human-Robot Shared Control for Surgical Robot Based on Context-Aware Sim-to-Real Adaptation

Dandan Zhang; Zicong Wu; Junhong Chen; Ruiqi Zhu; Adnan Munawar; Bo Xiao; Yuan Guan; Hang Su; Yao Guo; Gregory Fischer; Benny Lo; Guang-Zhong Yang

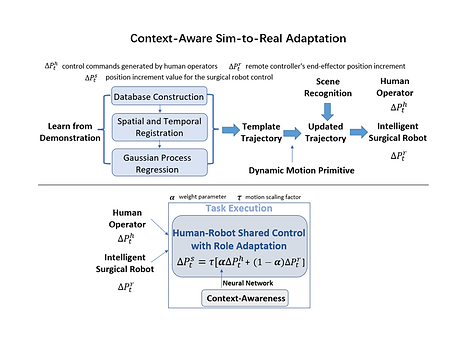

Human-robot shared control, which integrates the advantages of both humans and robots, is an effective approach to facilitate efficient surgical operations.

Learning from demonstration (LfD) techniques can be used to automate some of the surgical subtasks for the construction of the shared control mechanism. However, a sufficient amount of data is required for the robot to learn the manoeuvres. Using a surgical simulator to collect data is a less resource-demanding approach. With sim-to-real adaptation, the manoeuvres learned from a simulator can be transferred to a physical robot. To this end, we propose a sim-to-real adaptation method to construct a human-robot shared control framework for robotic surgery.

In this paper, a desired trajectory is generated from a simulator using an LfD method, and dynamic motion primitives (DMP) are used to transfer the desired trajectory from the simulator to the physical robotic platform. Moreover, a role adaptation mechanism is developed so that the robot can adjust its role according to the surgical operation context predicted by a neural network model.
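The DMP transfer step can be illustrated with a minimal one-dimensional discrete DMP in the common spring-damper-plus-forcing-term form. This is a sketch under assumptions: the class name `DMP1D`, the gains `alpha_z` and `alpha_x`, the number of basis functions and the minimum-jerk "simulator" demonstration are illustrative choices, not the paper's settings. In practice one such primitive would be fitted per Cartesian coordinate of the simulated trajectory and replayed with the start and goal measured on the physical robot.

```python
import numpy as np


class DMP1D:
    """Minimal discrete dynamic motion primitive for one coordinate (illustrative)."""

    def __init__(self, n_basis=30, alpha_z=25.0, alpha_x=4.0):
        self.n_basis = n_basis
        self.alpha_z = alpha_z
        self.beta_z = alpha_z / 4.0  # critically damped transformation system
        self.alpha_x = alpha_x
        # Gaussian basis centres spread over the canonical phase x in (0, 1].
        self.c = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
        self.h = 1.0 / np.diff(self.c, append=self.c[-1] / 2.0) ** 2
        self.w = np.zeros(n_basis)

    def _psi(self, x):
        return np.exp(-self.h * (x - self.c) ** 2)

    def fit(self, y_demo, dt):
        """Learn forcing-term weights from one demonstrated trajectory."""
        y_demo = np.asarray(y_demo, dtype=float)
        self.tau = (len(y_demo) - 1) * dt
        y0, g = y_demo[0], y_demo[-1]
        yd = np.gradient(y_demo, dt)
        ydd = np.gradient(yd, dt)
        x = np.exp(-self.alpha_x * np.arange(len(y_demo)) * dt / self.tau)
        # Forcing term that makes the spring-damper reproduce the demonstration.
        f_target = self.tau**2 * ydd - self.alpha_z * (
            self.beta_z * (g - y_demo) - self.tau * yd)
        s = x * (g - y0)
        for i in range(self.n_basis):
            psi = np.exp(-self.h[i] * (x - self.c[i]) ** 2)
            # Locally weighted regression for weight i.
            self.w[i] = np.sum(s * psi * f_target) / (np.sum(s * s * psi) + 1e-10)
        return self

    def rollout(self, y0, g, dt):
        """Replay the learned shape from a new start y0 to a new goal g (Euler steps)."""
        y, z, x = float(y0), 0.0, 1.0
        out = np.empty(int(round(self.tau / dt)) + 1)
        for k in range(len(out)):
            out[k] = y
            psi = self._psi(x)
            f = psi @ self.w / (psi.sum() + 1e-10) * x * (g - y0)
            zd = (self.alpha_z * (self.beta_z * (g - y) - z) + f) / self.tau
            yd = z / self.tau
            xd = -self.alpha_x * x / self.tau
            z, y, x = z + zd * dt, y + yd * dt, x + xd * dt
        return out


# Usage: learn from a minimum-jerk "simulator" demo, then retarget the goal.
dt = 0.001
s_demo = np.linspace(0.0, 1.0, 1001)
demo = 10 * s_demo**3 - 15 * s_demo**4 + 6 * s_demo**5  # reach from 0 to 1
dmp = DMP1D().fit(demo, dt)
replay = dmp.rollout(0.0, 1.0, dt)    # same start and goal as the demo
retarget = dmp.rollout(0.0, 2.0, dt)  # new goal, e.g. measured on the physical robot
```

Because the forcing term is scaled by `g - y0`, the retargeted motion keeps the demonstrated shape while ending at the new goal, which is the property that makes DMPs convenient for sim-to-real transfer.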

The effectiveness of the proposed framework is validated on the da Vinci Research Kit (dVRK). User study results indicated that, with the adaptive human-robot shared control framework, the path length of the remote controller, the total number of clutching events and the task completion time were significantly reduced. The proposed method outperformed traditional manual control via teleoperation.
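One common way to realize role adaptation in shared control is linear arbitration, where the robot's share of authority depends on the predicted context. The sketch below assumes this blending scheme; the context labels, the authority values in `CONTEXT_AUTHORITY` and the function names are hypothetical, and the context probabilities would come from the neural network classifier, which is not reproduced here.

```python
import numpy as np

# Hypothetical surgical contexts and the robot authority assigned to each.
CONTEXT_AUTHORITY = {"idle": 0.0, "transfer": 0.8, "fine_manipulation": 0.2}


def robot_authority(context_probs):
    """Expected robot authority under the predicted context distribution."""
    return sum(p * CONTEXT_AUTHORITY[c] for c, p in context_probs.items())


def shared_command(u_human, u_robot, context_probs):
    """Blend operator and autonomous velocity commands: u = (1 - a) u_h + a u_r."""
    a = robot_authority(context_probs)
    u = (1.0 - a) * np.asarray(u_human, dtype=float) + a * np.asarray(u_robot, dtype=float)
    return u, a


# Usage with a made-up classifier output.
probs = {"idle": 0.1, "transfer": 0.7, "fine_manipulation": 0.2}
u, a = shared_command([0.0, 0.01, 0.0], [0.02, 0.0, 0.0], probs)
```

Because the probabilities sum to one and each authority lies in [0, 1], the blended command always stays between the operator's and the robot's commands.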

07

A Self-Adaptive Motion Scaling Framework for Surgical Robot Remote Control

Dandan Zhang; Bo Xiao; Baoru Huang; Lin Zhang; Jindong Liu; Guang-Zhong Yang

Master–slave control is a common form of human–robot interaction for robotic surgery. To ensure seamless and intuitive control, this letter proposes a mechanism of self-adaptive motion scaling during teleoperation. The operator retains precise control during delicate or complex manipulation, while movement toward a remote target is accelerated via adaptive motion scaling. The proposed framework consists of three components: 1) situation awareness, 2) skill level awareness, and 3) task awareness. The self-adaptive motion scaling ratio allows operators to perform surgical tasks efficiently, reducing the need for frequent clutching and instrument repositioning. The framework has been verified on a da Vinci Research Kit to assess its usability and robustness. An in-house database, containing both kinematic data from the robot and visual cues captured through the endoscope, was constructed for offline model training and parameter estimation. Detailed user studies indicate that a suitable motion scaling ratio can be obtained and adjusted online. The operators' overall performance in terms of control efficiency and task completion is significantly improved with the proposed framework.
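The idea of a scaling ratio that shrinks near the target and grows during transfer motions can be sketched as follows. The functional form, the reference distance `d_ref`, the bounds `k_min`/`k_max` and the way the skill and task terms enter are all assumptions for illustration; in the paper the ratio is derived from models trained on the in-house database.

```python
import numpy as np


def motion_scale(dist_to_target, skill, task_gain=1.0, k_min=0.2, k_max=1.0, d_ref=0.02):
    """Hypothetical self-adaptive motion scaling ratio.

    dist_to_target: instrument-to-target distance in metres (situation awareness)
    skill: operator skill level in [0, 1] (skill level awareness)
    task_gain: task-dependent multiplier (task awareness)
    """
    # Near the target the ratio falls toward k_min for precision;
    # far from it the ratio rises toward k_max to speed up the approach.
    blend = 1.0 - np.exp(-dist_to_target / d_ref)
    k = k_min + (k_max - k_min) * blend
    # Less experienced operators get a more conservative (smaller) ratio.
    k *= (0.5 + 0.5 * skill) * task_gain
    return float(np.clip(k, k_min, k_max))


def slave_increment(master_delta, k):
    """Scale a master displacement into a slave displacement."""
    return k * np.asarray(master_delta, dtype=float)
```

Recomputing `k` every control cycle is what removes the need for clutching during long transfers while keeping fine motions scaled down.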


06

Deep Reinforcement Learning Based Semi-Autonomous Control for Robotic Surgery

In this paper, we propose a semi-autonomous control framework for robotic surgery and evaluate it in a simulated environment. We applied deep reinforcement learning to train an agent that performs simple but repetitive manoeuvres autonomously. Compared with learning from demonstration, deep reinforcement learning can learn a policy for a new goal by modifying the reward function, instead of requiring a new dataset to be collected. In addition to autonomous control, we created a handheld controller for precise manual control. The user can seamlessly switch to manual control at any time by moving the handheld controller. Finally, our method was evaluated in a customized simulated environment to demonstrate its efficiency relative to full manual control.
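Two mechanisms in this paragraph lend themselves to a short sketch: a goal-parameterised reward, where changing `goal` redefines the task without collecting new data, and the hand-over to manual control when the handheld controller moves. The reward shaping, the tolerance and the displacement threshold are assumptions rather than the paper's definitions, and the reinforcement learning training loop itself is omitted.

```python
import numpy as np


def make_reward(goal, tol=0.002, step_penalty=0.01):
    """Hypothetical goal-conditioned reward for a reaching manoeuvre."""
    goal = np.asarray(goal, dtype=float)

    def reward(tip_pos):
        d = float(np.linalg.norm(np.asarray(tip_pos, dtype=float) - goal))
        bonus = 1.0 if d < tol else 0.0
        return bonus - step_penalty - d  # success bonus plus dense distance term

    return reward


class ModeArbiter:
    """Hands control to the operator once the handheld controller is moved."""

    def __init__(self, move_threshold=0.005):
        self.move_threshold = move_threshold  # metres, assumed value
        self.mode = "autonomous"
        self._rest = None

    def update(self, controller_pos):
        p = np.asarray(controller_pos, dtype=float)
        if self._rest is None:
            self._rest = p  # the first reading defines the rest pose
        if self.mode == "autonomous" and np.linalg.norm(p - self._rest) > self.move_threshold:
            self.mode = "manual"
        return self.mode

    def command(self, policy_action, manual_action, controller_pos):
        """Return the action that should be sent to the robot this cycle."""
        if self.update(controller_pos) == "manual":
            return manual_action
        return policy_action
```

Training a policy for a new target then only requires calling `make_reward` with a different goal, which reflects the advantage over learning from demonstration described above.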


Ruiqi Zhu; Dandan Zhang; Benny Lo

05

Supervised Semi-Autonomous Control for Surgical Robot Based on Bayesian Optimization

Supervisory control functions should be included to ensure flexibility and safety during the autonomous control phase. This paper presents a haptic rendering interface that enables supervised semi-autonomous control of a surgical robot. Bayesian optimization is used to tune user-specific parameters during surgical training. User studies were conducted on a customized simulator for validation. Performance with and without the supervised semi-autonomous control mode is compared in detail in terms of the number of clutching events, task completion time, master robot end-effector trajectory and average control speed of the slave robot. The effectiveness of the Bayesian optimization is also evaluated, showing that the optimized parameters can significantly improve users' performance. Results indicate that the proposed control method can reduce the operator's workload and enhance operation efficiency.
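A compact Bayesian optimization loop (a Gaussian-process surrogate with an RBF kernel and the expected-improvement acquisition) shows how a single user-specific parameter could be tuned from a few trials. The parameter (a hypothetical haptic gain in [0, 1]), the synthetic cost `trial_cost` standing in for a measured performance score such as task completion time, and the kernel settings are all assumptions.

```python
import math

import numpy as np


def rbf(a, b, length=0.15):
    """Unit-variance squared-exponential kernel on 1-D inputs."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length**2)


def gp_posterior(x_obs, y_obs, x_query, noise=1e-6):
    """GP posterior mean and standard deviation (zero prior mean)."""
    K = rbf(x_obs, x_obs) + noise * np.eye(len(x_obs))
    Ks = rbf(x_obs, x_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    v = np.linalg.solve(L, Ks)
    var = np.clip(1.0 - np.sum(v**2, axis=0), 1e-12, None)
    return Ks.T @ alpha, np.sqrt(var)


_erf = np.vectorize(math.erf)


def expected_improvement(mu, sigma, best):
    """Expected improvement for minimisation."""
    z = (best - mu) / sigma
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return (best - mu) * cdf + sigma * pdf


def bayes_opt(cost, n_init=3, n_iter=12):
    grid = np.linspace(0.0, 1.0, 201)
    x = np.linspace(0.0, 1.0, n_init)  # deterministic initial trials
    y = np.array([cost(v) for v in x])
    for _ in range(n_iter):
        y_n = (y - y.mean()) / (y.std() + 1e-12)  # normalise observed costs
        mu, sigma = gp_posterior(x, y_n, grid)
        x_next = grid[np.argmax(expected_improvement(mu, sigma, y_n.min()))]
        x = np.append(x, x_next)
        y = np.append(y, cost(x_next))
    best = int(np.argmin(y))
    return x[best], y[best]


def trial_cost(gain):
    """Synthetic stand-in for a user's task completion time (seconds)."""
    return 10.0 + 40.0 * (gain - 0.35) ** 2


best_gain, best_cost = bayes_opt(trial_cost)
```

Each loop iteration corresponds to one training trial by the user, so the small evaluation budget is what makes Bayesian optimization attractive here.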


Junhong Chen#; Dandan Zhang#; Benny Lo; Guang-Zhong Yang

04

A microsurgical robot research platform for robot-assisted microsurgery research and training

A microsurgical robot research platform (MRRP) is introduced in this paper. The hardware system includes a slave robot with bimanual manipulators, two master controllers and a vision system, and it can flexibly accommodate multiple microsurgical tools. The software architecture is built on the Robot Operating System (ROS) and is extensible for high-level control. Different master–slave mapping strategies were explored, and different interfaces were compared.

Experimental verification was conducted on two microsurgical training tasks: trajectory following and targeting. User study results indicated that the proposed hybrid interface is more effective than the traditional approach in terms of clutching frequency, task completion time and ease of control. The results also indicated that the MRRP can be used for microsurgical skills training, since its motion kinematic data and vision data provide an objective means of verification and scoring. The proposed system can further be used to verify high-level control algorithms and task automation for robot-assisted microsurgery (RAMS) research.
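The objective scoring mentioned above can start from simple kinematic metrics. The sketch below computes path length, completion time and the number of clutch events from a logged trial; the log layout (timestamps, positions, a boolean clutch-pedal signal) and the function name are assumptions, and the paper's task-specific scores may be defined differently.

```python
import numpy as np


def trial_metrics(t, pos, clutch):
    """Objective metrics from one logged trial.

    t: (N,) timestamps in seconds
    pos: (N, 3) master or tool-tip positions
    clutch: (N,) booleans, True while the clutch pedal is pressed
    """
    t = np.asarray(t, dtype=float)
    pos = np.asarray(pos, dtype=float)
    clutch = np.asarray(clutch, dtype=bool)
    # Sum of Euclidean distances between consecutive samples.
    path_length = float(np.sum(np.linalg.norm(np.diff(pos, axis=0), axis=1)))
    # Each press (False -> True transition) counts as one clutch event.
    presses = int(np.count_nonzero(~clutch[:-1] & clutch[1:])) + int(clutch[0])
    return {
        "path_length": path_length,
        "completion_time": float(t[-1] - t[0]),
        "clutch_events": presses,
    }
```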

Full paper link:

https://link.springer.com/article/10.1007/s11548-019-02074-1

Dandan Zhang, Junhong Chen, Wei Li, Guang-Zhong Yang

03

TIMS: A Tactile Internet-Based Micromanipulation System with Haptic Guidance for Surgical Training

Jialin Lin, Xiaoqing Guo, Wen Fan, Wei Li, Yuanyi Wang, Jiaming Liang, Weiru Liu, Lei Wei, Dandan Zhang

Full Paper Link: https://arxiv.org/abs/2303.03566

Microsurgery involves the dexterous manipulation of delicate tissue or fragile structures, such as small blood vessels and nerves, under a microscope. To overcome the limited precision of the human hand, robotic systems have been developed to help surgeons perform complex microsurgical tasks with greater precision and safety. However, the steep learning curve of robot-assisted microsurgery (RAMS) and the shortage of well-trained surgeons pose significant challenges to its widespread adoption. A versatile training system for RAMS is therefore needed, one that can bring tangible benefits to both surgeons and patients.

In this paper, we present a Tactile Internet-Based Micromanipulation System (TIMS) for microsurgical training, built on a ROS-Django web-based architecture. The system provides tactile feedback to operators via a wearable tactile display (WTD), while real-time data is transmitted over the internet through the ROS-Django framework. In addition, TIMS integrates haptic guidance to "guide" trainees along a desired trajectory provided by expert surgeons. The desired trajectory is generated by learning from demonstration based on Gaussian Process Regression (GPR). User studies were conducted to verify the effectiveness of TIMS, comparing users' performance with and without tactile feedback and/or haptic guidance.
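GPR-based learning from demonstration and haptic guidance can be sketched together: pool several expert demonstrations indexed by a normalised phase, take the GPR posterior mean as the reference path, and render a saturated virtual spring toward the nearest reference point. The phase parameterisation, kernel hyperparameters, stiffness and force limit are assumptions; the paper's GPR inputs and guidance law may differ, and the WTD and ROS-Django components are not modelled.

```python
import numpy as np


def rbf(a, b, length, var):
    """Squared-exponential kernel between two 1-D phase vectors."""
    return var * np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length**2)


def gpr_reference(demos, n_out=100, length=0.1, var=1.0, noise=1e-2):
    """Pool demonstrations indexed by normalised phase and return the GPR mean path."""
    s = np.concatenate([np.linspace(0.0, 1.0, len(d)) for d in demos])
    Y = np.concatenate([np.asarray(d, dtype=float) for d in demos])  # (N, dims)
    y_mean = Y.mean(axis=0)
    K = rbf(s, s, length, var) + noise * np.eye(len(s))
    s_out = np.linspace(0.0, 1.0, n_out)
    # Posterior mean per coordinate: k(s*, S) (K + noise I)^-1 (Y - mean) + mean.
    return rbf(s_out, s, length, var) @ np.linalg.solve(K, Y - y_mean) + y_mean


def guidance_force(p, reference, stiffness=50.0, f_max=2.0):
    """Virtual spring pulling the tool tip toward the nearest reference point."""
    p = np.asarray(p, dtype=float)
    nearest = reference[np.argmin(np.linalg.norm(reference - p, axis=1))]
    f = stiffness * (nearest - p)
    norm = np.linalg.norm(f)
    return f if norm <= f_max else f * (f_max / norm)  # saturate for safety


# Usage: two slightly different expert demos of a straight 2-D path.
x1, x2 = np.linspace(0, 1, 50), np.linspace(0, 1, 60)
demos = [np.c_[x1, x1 + 0.01], np.c_[x2, x2 - 0.01]]
reference = gpr_reference(demos)
force = guidance_force([0.5, 0.6], reference)
```

The GPR mean averages out the small differences between demonstrations, and the force saturation keeps the guidance gentle when the trainee strays far from the reference.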

02

Hamlyn CRM: A Compact Master Manipulator for Surgical Robot Remote Control

Compact master manipulators have inherent advantages: they can be easily deployed in general surgical environments and can benefit surgical training. To assess the advantages of compact master manipulators for surgical skills training and for general robot-assisted surgical tasks, the Hamlyn Compact Robotic Master (Hamlyn CRM) is built and evaluated in this paper.

A compact structure for the master manipulator is proposed. A novel sensing system is designed, and stable real-time motion tracking is achieved by fusing information from multiple sensors. User studies based on a ring transfer task and a needle passing task were conducted to identify a suitable mapping strategy for the compact master manipulator to control a surgical robot remotely. The overall usability of the Hamlyn CRM is verified on the da Vinci Research Kit (dVRK), with the master manipulators of the dVRK control console used as the reference.

Motion tracking experiments verified that the proposed system can track the operator's hand motion precisely. Regarding the master-slave mapping strategy, user studies showed that combining the position relative mapping mode with the orientation absolute mapping mode is suitable for Robot-Assisted Minimally Invasive Surgery (RAMIS), and key mapping parameters were selected. Results indicated that the Hamlyn CRM can serve as a compact master manipulator for surgical training and has potential applications in RAMIS.
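The mapping combination reported above (relative position, absolute orientation) can be sketched as a small state machine with a clutch. The class name, the translation scaling factor and the use of a single fixed registration rotation `R_reg` between the master and slave base frames are assumptions for illustration; the key mapping parameters selected in the paper are not reproduced here.

```python
import numpy as np


class HybridMapping:
    """Relative position mapping with clutching plus absolute orientation mapping."""

    def __init__(self, slave_pos0, scale=0.3, R_reg=None):
        self.slave_pos = np.asarray(slave_pos0, dtype=float)
        self.scale = scale  # motion scaling factor for translations (assumed)
        # Fixed rotation from the master base frame to the slave base frame (assumed).
        self.R_reg = np.eye(3) if R_reg is None else np.asarray(R_reg, dtype=float)
        self._last_master = None

    def step(self, master_pos, master_R, clutch_pressed):
        master_pos = np.asarray(master_pos, dtype=float)
        if not clutch_pressed and self._last_master is not None:
            # Relative mode: only the master increment since the last cycle moves the slave.
            delta = master_pos - self._last_master
            self.slave_pos = self.slave_pos + self.scale * (self.R_reg @ delta)
        # While the clutch is pressed the master is repositioned freely; the slave holds.
        self._last_master = master_pos
        # Absolute mode: the slave orientation follows the registered master orientation.
        slave_R = self.R_reg @ np.asarray(master_R, dtype=float)
        return self.slave_pos.copy(), slave_R
```

Relative position mapping lets the operator re-centre the compact master by clutching, while absolute orientation mapping keeps the hand and instrument orientations aligned, which is one plausible reason this combination suits a device with a small workspace.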

Full paper link:

https://link.springer.com/article/10.1007/s11548-019-02112-y

01

A Handheld Master Controller for Robot-Assisted Microsurgery

Dandan Zhang; Yao Guo; Junhong Chen; Jindong Liu; Guang-Zhong Yang

Accurate master-slave control is important for Robot-Assisted Microsurgery (RAMS). This paper presents a handheld master controller for RAMS operation and training. A 9-axis Inertial Measurement Unit (IMU) and a micro camera form the sensing system of the handheld controller. A new hybrid marker pattern, integrating QR codes, ArUco markers and chessboard vertices, is designed to achieve reliable visual tracking. Real-time multi-sensor fusion is implemented to further improve tracking accuracy. The proposed handheld controller has been verified on an in-house microsurgical robot to assess its usability and robustness. User studies based on a trajectory following task indicated that the handheld controller performed comparably to the Phantom Omni, demonstrating its potential for microsurgical robot control and training.
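The abstract does not detail the fusion algorithm, so the sketch below swaps in a simple complementary filter for one rotation angle: the IMU gyroscope is integrated for smooth high-rate updates, and the camera-based marker estimate, when available, corrects the gyroscope drift. The blending factor `alpha`, the one-dimensional simplification and the convention that a missed marker detection is passed as `None` are assumptions.

```python
import numpy as np


def fuse_angle(gyro_rate, cam_angle, dt, alpha=0.98, theta0=0.0):
    """Complementary filter for one angle (rad).

    gyro_rate: angular rates from the IMU (rad/s), one per time step
    cam_angle: camera-based angle estimates (rad), or None when the marker is lost
    """
    theta = theta0
    out = []
    for w, c in zip(gyro_rate, cam_angle):
        predicted = theta + w * dt  # high-rate prediction from the gyroscope
        if c is None:
            theta = predicted  # no visual fix: rely on the IMU alone
        else:
            theta = alpha * predicted + (1.0 - alpha) * c  # pull toward the camera
        out.append(theta)
    return np.array(out)


# Usage: a stationary controller at 0.5 rad seen by a gyro with a 0.1 rad/s bias.
n, dt = 1000, 0.01
gyro = np.full(n, 0.1)
fused = fuse_angle(gyro, [0.5] * n, dt)
gyro_only = fuse_angle(gyro, [None] * n, dt, theta0=0.5)
```

Integrating the biased gyro alone drifts to about 1.5 rad after 10 s, whereas the fused estimate settles near 0.549 rad; the remaining offset, alpha * bias * dt / (1 - alpha), shrinks as `alpha` decreases, at the cost of passing more camera noise through.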


Our Work

This section presents our work on AI for Health and AI for Science.

We collaborate with biologists, chemists, and clinicians on highly interdisciplinary research.

Active Research Directions:

AI for Surgical Data Science

AI for Robotic Surgery

01

Automatic Microsurgical Skill Assessment Based on Cross-Domain Transfer Learning

The assessment of microsurgical skills for Robot-Assisted Microsurgery (RAMS) still relies primarily on subjective observation and expert opinion, so a general, automated evaluation method is desirable. Deep neural networks can assess skills directly from raw kinematic data, which is objective and efficient. However, a major issue with deep learning for surgical skill analysis is that it requires a large database to train the model, and training can be time-consuming. This paper presents a transfer learning scheme that trains a model with limited RAMS datasets for microsurgical skill assessment.

An in-house Microsurgical Robot Research Platform Database (MRRPD) is built from data collected on the microsurgical robot research platform (MRRP) and is used to verify the proposed cross-domain transfer learning approach for RAMS skill level assessment. After initial training, the model is fine-tuned with the data obtained from the MRRP. Moreover, microsurgical tool tracking is developed to provide visual feedback, while task-specific metrics and other general evaluation metrics are provided to the operator as a reference. The proposed method shows potential to guide operators toward a higher level of microsurgical skill.


Dandan Zhang; Zicong Wu; Junhong Chen; Anzhu Gao; Xu Chen; Peichao Li; Zhaoyang Wang; Guitao Yang; Benny Lo; Guang-Zhong Yang

02

Surgical Gesture Recognition Based on Bidirectional Multi-Layer Independently RNN with Explainable Spatial Feature Extraction

Dandan Zhang; Ruoxi Wang; Benny Lo

In this work, we aim to develop an effective surgical gesture recognition approach with an explainable feature extraction process. A Bidirectional Multi-Layer independently RNN (BML-indRNN) model is proposed, and spatial feature extraction is implemented by fine-tuning a Deep Convolutional Neural Network (DCNN) model based on the VGG architecture. To mitigate the black-box nature of the DCNN, Gradient-weighted Class Activation Mapping (Grad-CAM) is employed. It provides explainable results by highlighting the regions of the surgical images that are most strongly associated with the gesture classification results.
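Once the feature maps of the chosen convolutional layer and the gradients of the class score with respect to them are available, Grad-CAM reduces to a short computation: weight each channel by its global-average-pooled gradient, sum over channels and apply a ReLU. In the sketch below those two arrays are assumed to be given (in practice they come from the deep learning framework's automatic differentiation, for example via hooks on the last VGG convolutional layer), and upsampling the map to the input image size is omitted.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map for one image and one target class.

    activations: (K, H, W) feature maps A^k of a convolutional layer
    gradients:   (K, H, W) gradients dy^c / dA^k of the class score y^c
    """
    # Channel weights: global-average-pooled gradients.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps over channels, followed by ReLU.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam  # normalise to [0, 1] for display
```

The ReLU keeps only regions that increase the score of the predicted gesture, which is why the resulting heat map can be read as the image evidence for that class.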

The proposed method was evaluated on the suturing task using data from the publicly available JIGSAWS database. Comparative studies were conducted to verify the proposed framework. Results indicated that our method achieved a testing accuracy of 87.13% on the suturing task, outperforming most state-of-the-art algorithms.


Ongoing Projects

- Machine Learning-Based Adaptive Human-Robot Shared Control
- Haptic Guidance for Dexterous Manipulation
- Reinforcement Learning for Human-in-the-Loop Control

Summary

03

Real-time Surgical Environment Enhancement for Robot-Assisted Minimally Invasive Surgery Based on Super-Resolution

Ruoxi Wang, Dandan Zhang, Qingbiao Li, Xiao-Yun Zhou, Benny Lo

In Robot-Assisted Minimally Invasive Surgery (RAMIS), a camera assistant is normally required to control the position and zooming ratio of the laparoscope according to the surgeon's instructions. However, moving the laparoscope frequently may lead to unstable and suboptimal views, while adjusting the zooming ratio may interrupt the surgical workflow. To this end, we propose a multi-scale Generative Adversarial Network (GAN)-based video super-resolution method to construct a framework for automatic zooming ratio adjustment. It provides automatic real-time zooming for high-quality visualization of the Region of Interest (ROI) during surgery. In the pipeline, a Kernelized Correlation Filter (KCF) tracker tracks the tips of the surgical tools, while Semi-Global Block Matching (SGBM)-based depth estimation and Recurrent Neural Network (RNN)-based context awareness are used to determine the upscaling ratio for zooming. The framework is validated on the JIGSAWS dataset and the Hamlyn Centre Laparoscopic/Endoscopic Video Datasets, with results demonstrating its practicality.
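Two pieces of geometry behind the zooming decision can be sketched: converting a stereo disparity (such as the SGBM output) into depth with the rectified-stereo relation Z = fB/d, and choosing an upscaling factor so that a region around the tracked tool tips fills the frame. The supported scales, the physical margin and the boolean `zoom_context` flag (standing in for the RNN-based context awareness) are assumptions; the tip positions and disparities are assumed to come from the KCF tracker and the SGBM matcher, which are not reproduced here.

```python
import numpy as np

SUPPORTED_SCALES = (1, 2, 4)  # hypothetical upscaling factors of the SR model


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Rectified stereo: Z = f * B / d; non-positive disparities map to infinity."""
    d = np.asarray(disparity_px, dtype=float)
    return np.where(d > 0, focal_px * baseline_m / np.maximum(d, 1e-9), np.inf)


def zoom_ratio(tips_px, tip_depth_m, frame_wh, focal_px, margin_m=0.01, zoom_context=True):
    """Largest supported scale at which the ROI around the tool tips still fits the frame."""
    if not zoom_context:  # e.g. the context model predicts large transfer motions
        return 1
    tips = np.asarray(tips_px, dtype=float)
    # A fixed physical margin covers fewer pixels when the tools are farther away.
    margin_px = focal_px * margin_m / tip_depth_m
    roi_w = tips[:, 0].max() - tips[:, 0].min() + 2 * margin_px
    roi_h = tips[:, 1].max() - tips[:, 1].min() + 2 * margin_px
    max_ratio = min(frame_wh[0] / roi_w, frame_wh[1] / roi_h)
    feasible = [s for s in SUPPORTED_SCALES if s <= max_ratio]
    return max(feasible) if feasible else 1
```

With two tool tips 40 px apart in a 640 x 480 frame and an 800 px focal length, this rule picks 2x when the tips are 0.1 m away and 4x when they are 0.4 m away, because the same physical margin shrinks in pixels with depth.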
