Human-Robot Interaction

Research Overview:

Our research in Human–Robot Interaction (HRI) focuses on designing intelligent, intuitive, and trustworthy interfaces that enable humans and robots to work together effectively in complex, dynamic, and safety-critical environments. We combine advances in digital technologies, interaction design, and shared autonomy to create collaborative robotic systems that are both powerful and human-centred.

We focus on three areas: (1) advanced digital technologies (AR/VR/MR and cloud robotics) to enhance situational awareness and training, (2) multimodal interaction interfaces (gesture, visual and haptic feedback) for natural communication, and (3) human–robot shared control to balance autonomy and human intent for safe, precise operation.

Research Theme 1: Advanced Digital Technology

We leverage AR/VR/MR and cloud computing to improve human–robot collaboration and operation in complex environments. Augmented Reality (AR) overlays task-critical information and provides a shared view so robots can communicate intent and highlight objects, enabling more predictable teamwork. Virtual Reality (VR) supports immersive training and rehearsal (e.g., for surgical practice). Mixed Reality (MR) blends virtual and physical elements for interactive design, simulation, and teleoperation in remote or hazardous settings using real-time digital representations of the robot’s workspace. Cloud-based robotics enables experience sharing, remote monitoring/updates, and on-demand compute resources, allowing robots to scale their learning and problem-solving beyond onboard hardware limits.

HuBotVerse: Towards Internet of Human and Intelligent Robotic Things with a Digital Twin-Based Mixed Reality Framework

Dandan Zhang, Ziniu Wu, Jin Zheng, Yifan Li, Zheng Dong, Jialin Lin

Although the Internet of Robotic Things (IoRT) has enhanced the productivity of robotic systems in conjunction with the Internet of Things (IoT), it does not inherently support seamless human–robot collaboration. This article presents HuBotVerse, a unified framework designed to foster the evolution of the Internet of Human and Intelligent Robotic Things (IoHIRT). HuBotVerse is advantageous due to its unique features, including security, user-friendliness, manageability, and its open-source nature. Moreover, this framework can seamlessly integrate various human–robot interaction (HRI) interfaces to facilitate collaborative control between humans and robots. Here, we emphasize a digital twin (DT)-based mixed reality (MR) interface, which enhances teleoperation efficiency by offering users an intuitive and immersive way to interact with robots. To evaluate the effectiveness of HuBotVerse, we conducted user studies based on a pick-and-place task. Feedback was gathered through questionnaires, complemented by a quantitative analysis of key performance metrics, user experience, and the National Aeronautics and Space Administration Task Load Index (NASA-TLX). Results indicate that the fusion of MR and HuBotVerse within a comprehensive framework significantly improves the efficiency and user experience of teleoperation. Moreover, the follow-up questionnaires reflect the advantages of the HuBotVerse framework in terms of user-friendliness, manageability, and usability in home-care or health-care applications.

For code, project videos, tutorials, technical details, case studies, and Q&A, please check our website.
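The abstract above reports NASA-TLX workload scores. As a rough illustration of how such a score is commonly computed, the sketch below implements the unweighted ("raw") TLX, which averages the six standard subscale ratings on a 0 to 100 scale. Whether the study used the raw or the pairwise-weighted variant is not stated here, so treat the variant as an assumption; the function and key names are hypothetical.

```python
# Raw (unweighted) NASA-TLX: the mean of the six subscale ratings.
# Assumption: ratings are on a 0-100 scale; the weighted variant is not shown.
SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings):
    """Return the raw TLX workload score for one participant and one condition."""
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    for name in SUBSCALES:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"{name} must be within 0-100")
    return sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)


# Illustrative ratings only, not data from the study.
example = {
    "mental_demand": 60,
    "physical_demand": 20,
    "temporal_demand": 40,
    "performance": 30,
    "effort": 50,
    "frustration": 10,
}
score = raw_tlx(example)
```

A lower score indicates lower perceived workload, so comparing per-participant scores across interface conditions gives a simple workload comparison.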

Towards the New Generation of Smart Home-Care with Cloud-Based Internet of Humans and Robotic Things

Dandan Zhang; Jin Zheng

The burgeoning demand for home-care services, driven by a rapidly aging global population, necessitates innovative solutions to alleviate the burden on caregivers and enhance care quality. This paper introduces the development of an Internet of Human and Robotic Things (IoHRT) framework, which synergizes cloud computing and the Internet of Robotic Things (IoRT) with human-robot collaborative control mechanisms for home-care applications. The IoHRT framework is designed to enable the seamless integration of customizable robotic platforms with modular, scalable, and compatible features, thereby creating a dynamic and adaptable home-care ecosystem. By leveraging the scalability and computational power of cloud computing, the framework facilitates real-time data analysis and remote monitoring, thus enhancing the efficiency and effectiveness of home-care. We present an in-depth analysis of the key characteristics of IoHRT, supported by evidence embedded in our design, and conduct user studies to evaluate the framework from users' perspectives. We demonstrate the performance and utility of our proposed framework for the future of home-care applications.

Research Theme 2: Interaction Interfaces

We develop rich, multimodal interaction interfaces that go beyond speech-based communication to enable more natural and effective human–robot interaction.

Our work explores gesture-based interaction, visual cues, and haptic feedback, allowing robots to communicate information and intent in intuitive ways. In particular, we design haptic interfaces that provide tactile feedback to users, enhancing situational awareness and user confidence in tasks requiring high precision or sensory validation, such as manipulation, surgery, and teleoperation.

Digital Twin-Driven Mixed Reality Framework for Immersive Teleoperation With Haptic Rendering

Wen Fan, Xiaoqing Guo, Enyang Feng, Jialin Lin, Yuanyi Wang, Jiaming Liang, Martin Garrad, Jonathan Rossiter, Zhengyou Zhang, Nathan Lepora, Lei Wei, Dandan Zhang

Teleoperation has widely contributed to many applications. Consequently, the design of intuitive and ergonomic control interfaces for teleoperation has become crucial. The rapid advancement of Mixed Reality (MR) has yielded tangible benefits in human-robot interaction. MR provides an immersive environment for interacting with robots, effectively reducing the mental and physical workload of operators during teleoperation. Additionally, the incorporation of haptic rendering, including kinaesthetic and tactile rendering, could further amplify the intuitiveness and efficiency of MR-based immersive teleoperation. In this study, we developed an immersive, bilateral teleoperation system, integrating Digital Twin-driven Mixed Reality (DTMR) manipulation with haptic rendering. This system comprises a commercial remote controller with a kinaesthetic rendering feature and a wearable cost-effective tactile rendering interface, called the Soft Pneumatic Tactile Array (SPTA). We carried out two user studies to assess the system's effectiveness, including a performance evaluation of key components within DTMR and a quantitative assessment of the newly developed SPTA. The results demonstrate an enhancement in both the human-robot interaction experience and teleoperation performance.

Main Contributions:

- Integrating the DT technique into an MR-based immersive teleoperation system, leading to the development of the Digital Twin-driven Mixed Reality (DTMR) framework.

- Building a novel haptic rendering system based on i) pneumatically-driven actuators for tactile feedback on human fingertips, and ii) a commercial haptic controller that enables kinaesthetic rendering and real-time motion tracking.

- Constructing a seamless bilateral teleoperation framework through the integration of immersive manipulation via DTMR and the novel haptic rendering device, which includes both kinaesthetic and tactile rendering.

A Digital Twin-Driven Immersive Teleoperation Framework for Robot-Assisted Microsurgery

Peiyang Jiang, Dandan Zhang

This paper presents a novel digital twin (DT)-driven framework for immersive teleoperation in the domain of robot-assisted microsurgery (RAMS). The proposed method leverages the power of DT to create an interactive, immersive teleoperation environment using mixed reality (MR) technology for surgeons to conduct robot-assisted microsurgery with higher precision, improved safety, and higher efficiency. The simulated tissue can be highlighted in DT, which provides an intuitive reference for the operator to conduct micromanipulation with safety, while the MR device can provide operators with the 3D visualization of a digital microrobot mimicking the motions of the physical robot and the 2D microscopic images in real-time.

We evaluated the proposed framework through user studies based on a trajectory-following task, comparing performance with and without the proposed framework. The NASA-TLX questionnaire, along with additional evaluation metrics such as total trajectory, time cost, mean velocity, and a predefined collision metric, was used to analyze the user studies.

Results indicated that the proposed DT-driven immersive teleoperation framework can enhance the precision, safety, and efficiency of teleoperation and provides a satisfactory user experience for operators.
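The trajectory metrics named in the evaluation above can be computed from timestamped tool-tip samples. The sketch below assumes that "total trajectory" means the cumulative path length and that mean velocity is path length divided by elapsed time; both readings, and the function name, are assumptions rather than the paper's definitions. The predefined collision metric is not specified here, so it is left out.

```python
import math


def trajectory_metrics(timestamps, positions):
    """Compute path length, time cost, and mean velocity for one trial.

    timestamps: increasing times in seconds.
    positions:  equally long sequence of (x, y, z) tool-tip positions.
    """
    if len(timestamps) != len(positions):
        raise ValueError("timestamps and positions must have the same length")
    if len(positions) < 2:
        raise ValueError("at least two samples are required")

    # Sum of straight-line distances between consecutive samples.
    path_length = sum(
        math.dist(p, q) for p, q in zip(positions, positions[1:])
    )
    time_cost = timestamps[-1] - timestamps[0]
    mean_velocity = path_length / time_cost if time_cost > 0 else 0.0
    return {
        "total_trajectory": path_length,
        "time_cost": time_cost,
        "mean_velocity": mean_velocity,
    }


# Illustrative samples only, not data from the study.
metrics = trajectory_metrics(
    [0.0, 1.0, 2.0],
    [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)],
)
```

Units follow the inputs: positions in millimetres and timestamps in seconds would yield a mean velocity in millimetres per second.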

Research Theme 3: Human-Robot Shared Control

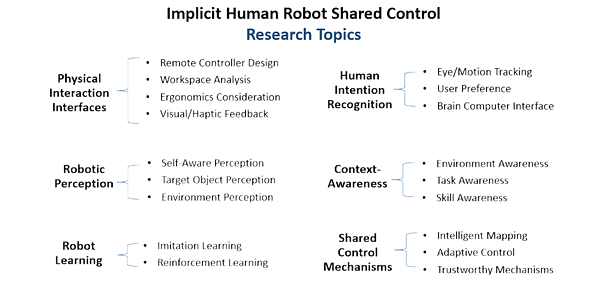

We study human–robot shared control frameworks that combine human intuition and decision-making with robotic autonomy. Our research focuses on adaptive shared control strategies in which control authority is dynamically balanced between the human and the robot based on task context, user intent, and environmental uncertainty. By integrating multimodal sensing, learning-based models, and real-time feedback, we aim to improve safety, performance, and user trust in complex manipulation and interaction tasks.
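One common way to realise this kind of dynamic authority balance is linear command blending, where a single weight sets how much of the executed command comes from the robot's autonomous policy. The sketch below is a generic illustration, not the method used in our papers: the arbitration rule that maps intent confidence and uncertainty to the weight, the `max_alpha` cap, and all names are hypothetical.

```python
def clamp(value, low=0.0, high=1.0):
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def authority_from_confidence(intent_confidence, uncertainty, max_alpha=0.8):
    """Hypothetical arbitration rule for the robot's share of control.

    The robot takes more authority when it is confident about the user's
    intent and the environment is well known. max_alpha caps the robot's
    share so the human always keeps some control.
    """
    score = clamp(intent_confidence) * (1.0 - clamp(uncertainty))
    return max_alpha * score


def blend_commands(human_cmd, robot_cmd, alpha):
    """Linearly blend two velocity commands of equal dimension.

    alpha = 0 gives pure teleoperation; alpha = 1 gives full autonomy.
    """
    if len(human_cmd) != len(robot_cmd):
        raise ValueError("commands must have the same dimension")
    alpha = clamp(alpha)
    return [(1.0 - alpha) * h + alpha * r for h, r in zip(human_cmd, robot_cmd)]


# Example: the robot is fairly sure of the intent in a moderately known scene.
alpha = authority_from_confidence(intent_confidence=0.5, uncertainty=0.5)
command = blend_commands([1.0, 0.0], [0.0, 1.0], alpha)
```

In a real system the weight would be updated every control cycle, and it would typically be smoothed over time so that authority does not jump abruptly between the human and the robot.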

Below is our work related to implicit human-robot shared control.

Human-Robot Shared Control for Surgical Robot Based on Context-Aware Sim-to-Real Adaptation

Dandan Zhang; Zicong Wu; Junhong Chen; Ruiqi Zhu; Adnan Munawar; Bo Xiao; Yuan Guan; Hang Su; Yao Guo; Gregory Fischer; Benny Lo; Guang-Zhong Yang

Human-robot shared control, which integrates the advantages of both humans and robots, is an effective approach to facilitate efficient surgical operations.

Learning from demonstration (LfD) techniques can be used to automate some of the surgical subtasks for the construction of the shared control mechanism. However, a sufficient amount of data is required for the robot to learn the manoeuvres. Using a surgical simulator to collect data is a less resource-demanding approach. With sim-to-real adaptation, the manoeuvres learned from a simulator can be transferred to a physical robot. To this end, we propose a sim-to-real adaptation method to construct a human-robot shared control framework for robotic surgery.

In this paper, a desired trajectory is generated in a simulator using an LfD method, while dynamic motion primitives (DMPs) are used to transfer the desired trajectory from the simulator to the physical robotic platform. Moreover, a role adaptation mechanism is developed so that the robot can adjust its role according to the surgical operation context predicted by a neural network model.

The effectiveness of the proposed framework is validated on the da Vinci Research Kit (dVRK). Results of the user studies indicated that the adaptive human-robot shared control framework significantly reduces the path length of the remote controller, the total number of clutching actions, and the task completion time. The proposed method outperformed traditional manual control via teleoperation.
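The trajectory-transfer step described above relies on DMPs, which encode a demonstrated motion as a stable spring-damper system plus a learned forcing term, so the same motion shape can be replayed towards a different start or goal. The sketch below is a minimal one-dimensional DMP in the common textbook form, fitted by locally weighted regression. It is an illustration under stated assumptions, not the implementation from the paper: the gains, the basis-function layout, and the class name `DMP1D` are all hypothetical choices.

```python
import math


class DMP1D:
    """One-dimensional discrete DMP in a common textbook form (illustrative)."""

    def __init__(self, n_basis=20, alpha_z=25.0, alpha_x=4.0):
        self.alpha_z = alpha_z
        self.beta_z = alpha_z / 4.0  # critically damped spring
        self.alpha_x = alpha_x       # decay rate of the phase variable x
        # Centres evenly spaced in time, hence exponentially spaced in phase.
        self.centers = [math.exp(-alpha_x * i / (n_basis - 1)) for i in range(n_basis)]
        gaps = [self.centers[i] - self.centers[i + 1] for i in range(n_basis - 1)]
        self.widths = [1.0 / g ** 2 for g in gaps] + [1.0 / gaps[-1] ** 2]
        self.weights = [0.0] * n_basis
        self.y0, self.g = 0.0, 1.0

    def _psi(self, x):
        """Gaussian basis activations at phase x."""
        return [math.exp(-h * (x - c) ** 2) for c, h in zip(self.centers, self.widths)]

    def fit(self, demo, dt):
        """Fit forcing-term weights to one demonstration; return its duration."""
        n = len(demo)
        tau = (n - 1) * dt
        self.y0, self.g = demo[0], demo[-1]

        def diff(seq):
            # Central differences inside, one-sided differences at the ends.
            out = []
            for k in range(n):
                lo, hi = max(k - 1, 0), min(k + 1, n - 1)
                out.append((seq[hi] - seq[lo]) / ((hi - lo) * dt))
            return out

        vel = diff(demo)
        acc = diff(vel)
        num = [0.0] * len(self.weights)
        den = [1e-10] * len(self.weights)
        for k in range(n):
            x = math.exp(-self.alpha_x * k * dt / tau)
            # Forcing value that would reproduce the demonstration exactly.
            f_target = tau ** 2 * acc[k] - self.alpha_z * (
                self.beta_z * (self.g - demo[k]) - tau * vel[k])
            s = x * (self.g - self.y0)
            for i, p in enumerate(self._psi(x)):
                num[i] += p * s * f_target
                den[i] += p * s * s
        self.weights = [a / b for a, b in zip(num, den)]
        return tau

    def rollout(self, tau, dt, y0=None, g=None):
        """Integrate the DMP with Euler steps, optionally with a new start or goal."""
        y0 = self.y0 if y0 is None else y0
        g = self.g if g is None else g
        y, z, x = y0, 0.0, 1.0
        path = [y]
        for _ in range(int(round(tau / dt))):
            psi = self._psi(x)
            f = sum(p * w for p, w in zip(psi, self.weights)) / (sum(psi) + 1e-10)
            f *= x * (g - y0)
            z += dt * (self.alpha_z * (self.beta_z * (g - y) - z) + f) / tau
            y += dt * z / tau
            x += dt * (-self.alpha_x * x) / tau
            path.append(y)
        return path


# A smooth minimum-jerk curve stands in for a demonstration from the simulator.
dt = 0.01
demo = [10 * s ** 3 - 15 * s ** 4 + 6 * s ** 5 for s in (k * dt for k in range(101))]
dmp = DMP1D()
tau = dmp.fit(demo, dt)
same_goal = dmp.rollout(tau, dt)        # replay towards the demonstrated goal
new_goal = dmp.rollout(tau, dt, g=2.0)  # same motion shape, different goal
```

Because the forcing term is scaled by the start-to-goal distance and decays with the phase variable, the rollout converges to whichever goal is supplied. That property is what makes this representation convenient for carrying a motion learned in simulation over to a physical platform whose target pose differs.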